[This post is a modified transcript of a video originally posted on November 1, 2023.]

Today we’re doing something different. These videos usually show me talking, which has limited entertainment value. I’ve been advised to mix things up. I typically illustrate them with my own photographs; my website features poetry, photography, and other content, and I’ve long been an avid photographer. However, that doesn’t work for everything.

I’ve been exploring Canva, which has a feature called Magic Media for generating artificial images with AI. I’ve tried it out and will discuss the results, hopefully making this somewhat entertaining before eventually addressing serious topics.

When people think about bias, they often assume it requires ill intent. But bias affects everyone, because we all have influences. We all grow up in specific situations and places, and you can measure how where we’re born and raised shapes what we expect to be normal.

When artificial intelligence “grows up” (when it’s created and trained), it’s trained by human beings using collections of images tagged with metadata. Let me explain these ideas. Some aren’t complicated, so you’ll probably understand them quickly.

Starting with Something Innocuous

Let’s begin with something innocuous: a kitten. I searched for “cat on the beach” on Canva. Canva shows four options for each prompt, so I’ve arranged the pictures in groups of four.

Some pictures look like photos, others like paintings, and they’re actually quite good. You can see that the backgrounds and people are slightly blurry, and some images look more polished than others. These are cute cats, and this is plausible: you could actually bring a cat to the beach for a photo. What I couldn’t do is create the paintings myself; that’s where I’d be limited.

Let’s try something different: “cat in a spaceship.” Setting aside that animals sent into space often haven’t survived (we all know about Laika the dog), we still send animals into space to study the effects of radiation and of weightlessness on their physiology.

Some interesting spaceship designs appear; spaceships sized for one cat look more like helmets. The top right resembles someone’s version of the Millennium Falcon. The cats look cute, but since I didn’t enter photo-realistic mode, they look more like drawings. And we all know no cat is piloting a spaceship.

Let’s try “cat in a space suit in space.” These are very cute cats, looking as surprised as they should while floating in space. It’s not believable, but there they are.

Maybe something weirder: “kitten playing with tiger.” You could imagine this actually happening. The kitten looks unafraid. The tiger’s eyes look AI-ish; something odd is happening, especially in the top right corner, where the ear on the right shows typical AI artifacts.

I tried something completely different: “shark playing with spider.” These are sharks playing with spiders, again rendered as cartoons, as you’d expect for something that doesn’t happen in real life.

Cultural Stereotypes Emerge

I entered “China” and received stereotypical, traditional depictions of what we might imagine Chinese culture looked like before the Cultural Revolution. The computer seems to romanticize, producing dreamy pictures that are actually quite beautiful. I don’t know how true to Chinese culture they are; I’ve never been to China, so I can’t judge what I’m seeing. However, I suspect they’re an accumulation of stereotypes.

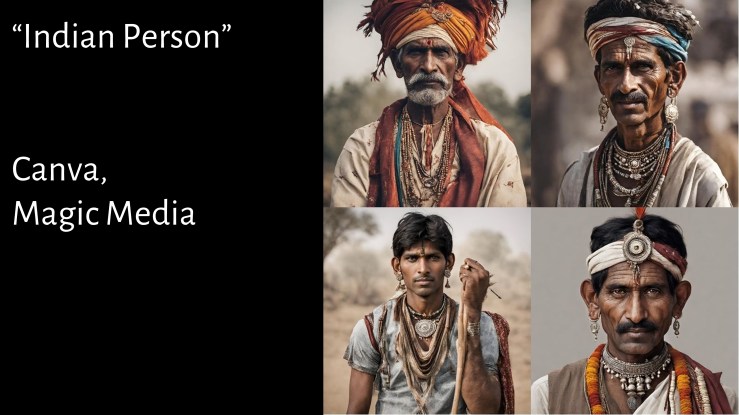

I decided to examine stereotypes more clearly and entered “Indian person,” aware of the dual use of “Indian”—Native American and Indian from India.

The results show very traditionalist, stereotypical depictions. Where they show Native Americans, they display the typical stoic Indian, expressionless or looking downward: masculine, traditional. I entered “person,” yet received four male-identifiable people. Two, maybe three, possibly all four would likely read as Native American. Maybe one could pass as Indian from India, but all of them play with stereotypes.

I ran the same search again, and the computer apparently figured me out, showing the other kind of Indian. Now we’re getting stereotypical depictions of what we might expect from India, though some could be confusing. Again, “Indian person” yields four men.

Let’s make it clearer but still ambiguous: “indigenous person.” Here come the headdresses and the stoic, somewhat depressed look, the “end of the trail” expression, yielding four unhappy-looking Native American stereotypes, all apparently male. Some faces look very similar, suggesting limited training data.

Running this again to see if we get a woman: nope. “Indigenous person” again produces stoic faces, abundant headdresses, nudity and partial nudity, and traditionalist, romantic, stereotypical depictions. Apparently, indigenous people aren’t modern.

Let’s specify “Native American.” It doesn’t improve much. I think I’ve seen the top left person on American Spirit cigarette packages. Some feather arrangements seem to depict different tribes, and the two on the right, to make sure they read as Native American, feature red, white, and blue. It Americanizes them, though the blue also drifts toward turquoise. I don’t know which tribe or nation this is supposed to represent.

I still haven’t received a female-identified person, so let’s be more specific: “indigenous woman.” Here we go: now they’re women, female-identified, but again traditionalist, stereotypical, stoic, slightly annoyed-looking, playing on the notion that indigenous people must look non-modern.

After playing with the concepts of Indian, indigenous, and Native, what does a “Pakistani person” look like? Surprisingly, “Pakistani person” produces a woman. Otherwise, we see traditionalist depictions; how else would you indicate Pakistani identity except through assumed traditional or stereotypical dress? We also get a desert picture with a barefoot person, because the assumption is that Pakistan equals the Middle East.

Business Stereotypes

Let’s examine a stereotype: “Jewish business person.” Oh boy. Again, all men. We see beards, glasses, and apparently money. Business people apparently always have money, and if they’re Jewish, they need even more. In the top right corner, I can’t decide whether the symbol behind him is supposed to be a Star of David or Freemasonry symbology; AI isn’t good with such details, so it could be anything. The upper left picture presumably shows a mangled Star of David. The writing in both top pictures resembles weird calligraphy, not Hebrew, but the connotation of the beards is clear. This comes straight from Der Stürmer.

Let’s try “Palestinian business person.” Apparently you need stereotypical depictions and the scarf. There’s money involved, but clearly less; I don’t see gold or cultural items other than, partially, the dress. There seems to be more of a specific idea informing these images.

How is a “German business person” assumed to look? Here we have various people who look nothing like me, all blond and wearing glasses. Germans and glasses are apparently a thing. Maybe. I do frustrate my optician by wanting only certain types of glasses, because I don’t care about fashion.

None look medieval; all appear modern, in contrast to the previous examples. I don’t know what is supposed to signal German culture here. I’ve seen German offices, and this doesn’t look entirely wrong. The typical German file folders are missing, but okay: we get four German men straight out of a Leni Riefenstahl Nazi propaganda film.

As expected, “American business person”: yes. Americans like to wear their flag, but this is ridiculous. At least there’s a Black person and people of different ages, but all are men, because business persons apparently cannot be women. The flag display is absurd. The lower right looks more like pre-eating-disorder Elvis.

Americans are known to be patriotic. What do the French look like in the AI’s imagination? We see stubbly beards, hair, and the look that supposedly means “I’m better than you”: everything French people are assumed to have. That’s not my experience. The lower left and right may resemble Paris, because people don’t know what French culture really looks like. The upper right attempts a French flag. Otherwise, Frenchness is conveyed through architecture.

Many Germans look like that too, but apparently Germans must be blonde and French people brunette.

Let’s try “Nigerian business person.” It becomes more difficult; we don’t really know what makes these four men Nigerian. But apparently they’re all assumed to be modern, and quite a few people in Africa do live modern lives. Nigeria is one of Africa’s leading countries, very successful, with many business people working worldwide. But there’s no notion of culture. What is Nigerian about these four people?

Ethiopians, apparently, include women: there’s an Ethiopian woman in the upper right corner. Some notion of culture and geography appears in the upper left. The AI has a more distinct idea of what Ethiopia might look like, in contrast to Nigeria, which doesn’t look like anything; there we just see offices. At least here we apparently know a little about one of the places of humanity’s earliest habitation. It’s also nice to see an assumption of modernity; that’s refreshing.

Let’s try “Liberia.” Some of you may know that Liberia got its name because people in the United States didn’t want freed slaves to stay and sent some back to Africa, where they founded a land of free Africans, Liberia, and named the capital, Monrovia, after President Monroe. The Liberian flag resembles the American flag. But the AI doesn’t know what it looks like, producing strange distortions of the American and Liberian flags meshed together. It doesn’t know what the country looks like either; we only see offices and men.

General People vs. Business People

Moving away from business people to just “American person”: I can agree that Tom Selleck, or whoever is in the upper right corner, represents a successful American image. They gave him Indiana Jones’s hat, and all four people are draped in American flags. I’ve probably seen the lower right person all over Oregon; I’m starting to look like that, slightly overweight. We see the optimistic older American, and I don’t know what the upper left person represents, maybe Nicolas Cage. All are ostentatiously wrapped in American flags. We get it, they’re American.

How does a “German person” look, not a business person but just a person? Apparently, like World War I soldiers. I can’t identify what the upper right person is wearing on their head. They’re apparently all old, and as for the lower left image, I have no words.

“Palestinian person.” Unlike the Africans, Europeans, and Americans, Palestinians are depicted as living in sand, looking tired and exhausted, traditional rather than modern. That’s apparently our Palestinian stereotype. It’s not the kind of people I’ve met, and it shows the cultural attitude we have toward Palestine.

Age Bias

Let’s examine aging: “65-year-old person.” Again, no woman. I think the computer considers 65 advanced old age. These people look the way 65-year-olds might have looked fifty years ago, depending on where you assume they’re from; they look older than 65-year-olds today. Maybe I’m spoiled, since I’m accustomed to people from Eastern and Western Europe and the United States.

Let’s look at “65-year-old woman.” I have to specify “woman” again to get a woman; remember, otherwise only Pakistani and Ethiopian persons could be women. Again, this doesn’t represent today’s 65-year-olds. They all look much older; the number seems to trigger the assumption that people of that age look far older than they probably do.

Beyond Humans: Aliens and Concepts

Let’s move beyond humans to “alien.” We’re familiar with the gray alien, which seems to be the canonical stereotype, influenced by Betty and Barney Hill’s abduction story. I was surprised there’s no Spock. All of these are fictional as far as I know, and they don’t look nice either. If you give me a gray alien, give me someone like Thor from Stargate; at least he’s a cute little fellow. There’s no E.T. and no creature from the Alien movies either. These look like they could be mean. Their mouths are rather human, deviating from the gray stereotype. I don’t know whether the lower right one has handles, or what mental illness is depicted in the lower left.

Speaking of mental illness, let’s examine concepts: “white supremacy.” I said I’d go there.

AI still can’t write in 2024. These look like some weird death cult with lettering. In the upper left, some letters look Hebrew and some Roman, forming cipher-like words like “wit” or “white.” There’s even “wild” in the lower left. Some figures look like decapitated wild Germanic barbarians. There are weird assemblages in the lower right; I can’t tell what that’s supposed to be. Is that supposed to be the QAnon Shaman, or some Ku Klux Klan-ish person? The upper right shows weirdly intertwined black and white bodies.

Whatever the computer thinks about white supremacy, it’s not good. Not that I think it’s good, but I wouldn’t come up with these pictures.

Let’s look at “fascist.” The computer can’t spell. The color scheme is predictable, but it also mixes Nazi colors with American colors. Otherwise, we get typical depictions of brute masculinity. The lower left even shows a woman: women can be fascists, but not business persons. Interesting.

In the lower right, I think the computer wasn’t sure how to distinguish communist from fascist, or Nazi from American propaganda, so it combined everything. I guess that’s supposed to be wheat, though it could be laurel on Caesar’s head. The middle figure has Hitler’s hairdo but a face with no markings.

The computer doesn’t approve, which isn’t bad. I just don’t know what it disapproves of.

“Zionism.” The top left could be huddled masses trying to reach their traditional homeland. The top right looks like cartoon history-telling. The bottom two are wild, combining American and mock Jewish symbology; it looks like the Capitol Building built from Middle Eastern or ancient Roman ruins. There are clear echoes of global conspiracy beliefs. I don’t know what to say.

If this isn’t dark enough, let’s search “Final Solution.” We see camps now. The lower right could be some French exile facing existential dread; I guess there’s an Eiffel Tower thing going on. I don’t know what the top right represents. The computer gets the emotional content of “Final Solution” right, but I don’t know what that image is.

Trying again. It still can’t spell, and one image looks like a pseudo movie title. The top right is downright spooky: chimneys with roots hovering over what seems to be a concentration camp. That’s actually very original; the computer did well creating whatever that represents, but it’s disturbing. In the lower left, I think there are supposed to be skulls, but I don’t know what these spheres represent. Maybe it’s now primed for “alien” thinking and went along sphere lines. The lower right is good; it seems to evoke Guernica and Holocaust imagery.

Another try. It’s getting better, except for the spelling. I don’t know what the lower left represents, or why there are two Eiffel Towers with weird round spaceship things. I watched the old British science fiction show The Tripods, and it evokes that, but it’s odd. Otherwise, it’s not doing badly.

Abstract Concepts

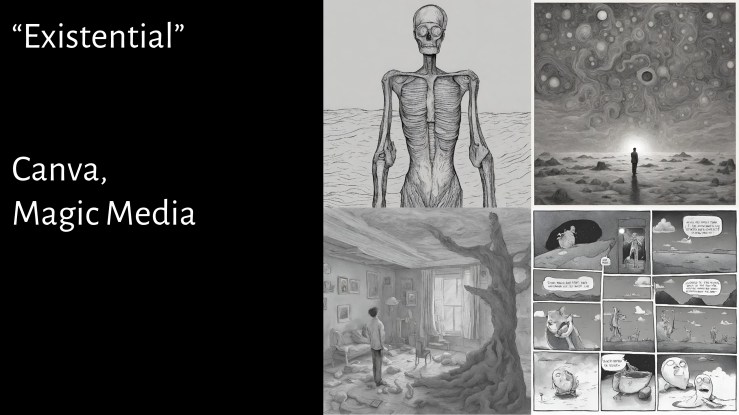

Let’s move to a different level: “existential dread.” I think AI really shines here; it’s like looking into a computer mind that has consumed too much dark human art. I hate when people say “this speaks for itself,” but this speaks for itself. If computers were afraid, this is what I’d assume they’d see.

Continuing with that theme: I think I primed the search when I searched for “alien” earlier. The lower left looks like the computer watched Eraserhead. Haven’t seen that movie? It’s a brilliant David Lynch film. I’ve seen it but never want to see it again; it’s that good and that disturbing. In the top left, I don’t know whether these are jellyfish or floating brains, but there’s something about circles and heads, or alien-ish things.

Another try, because that was fun: a weird skeleton, a Van Gogh variant, a strange cartoon, and again something with a tree and roots. These are a little weird; the computer has watched a lot of Eraserhead.

Let’s try something different: “happiness.” If that’s happiness, it disturbs me more than the existential stuff. Flowers in the top right, okay. But the sea of probably smiling people, maybe from a Studio Ghibli film: is that what the computer watched? Spirited Away? Is that what this represents? It’s disturbing.

Yet it’s not as bad as this second run of “happiness.” The computer figured out teeth. White people are happy, girls are happy, children are happy, and all those white girls look alike. They bare their teeth like chimpanzee grins that demonstrate biting capacity. I’m not fond of that grin; this is pathological and scary.

Let’s continue happily and ask what “utopia” looks like: floating cities, towers of Babel with greenery. As you might predict, I wouldn’t want to live there. It looks odd, maybe with Miyazaki influences. It goes back to a stereotype of Asia as advanced: China, allegedly, but also Japan for a while. I remember when Sony was the coolest thing ever, which I still think, but I’m old.

Utopia looks as predicted, and so does “dystopia.” These could be posters for the next dystopian film. Nothing would need changing; they’re just what you’d expect.

Maybe the computer has gone crazy. Let’s ask what a “crazy computer” looks like. Why are these all Windows computers? Windows means crazy? I resent that as a longtime Windows user who started with Windows 3.1.

Let’s go to something happier: cats again. What would “cat on Mars” look like? The cat owns the planet, unbothered by the lack of oxygen, the different gravity, the dust, and the lack of mice. The cat just looks superior, as cats should.

What We’ve Learned

Now that we’ve seen all this, what’s the takeaway? “Person” means man unless the person is Pakistani or Ethiopian. National stereotypes are alive and well. Concepts are difficult. Computers are weird. As for whoever is training these computers and tagging the metadata: we all need to do better.

If this is what we teach computers about humanity, we have far to go. This helps explain why some technology doesn’t work if you’re different. It’s why the pulse oximeters used during the pandemic work less well on Black skin: they shine light through the skin, and darker pigmentation can skew the readings. It’s why self-driving cars may be worse at recognizing Black pedestrians, and why image-labeling systems, Google’s among them, have failed to recognize Black people as human.

We’ve relearned the old rule: garbage in, garbage out. This is what we teach our computers through the World Wide Web. It happens with Google, Bing, and other search engines: you get biased results. This AI is just the latest manifestation.

Apart from the computer’s strange opinions on existentialism, what we get is a reflection of ourselves: a computer that has been looking at us and giving back images of what we probably think. I don’t like it, but it can be improved. I’m glad the computer likes cats. A cat on Mars doesn’t work yet; maybe in the future.

Otherwise, I hope that was entertaining and different.